You didn't miss Part I, at least not here you didn't.

Many of you know Mike Riley. If you don't, here's a little history. He's the past president of ODTUG (for like 37 years or something) and for the last two years, he's served as Conference Chair for Kscope. Yeah, that doesn't really follow, but you know I'm a bit...scattered.

Did you read the link above? OK, well, here's the skinny. Mike has rectal cancer. Stage III. If it weren't for the stupid cancer part, the jokes would abound. Oh wait, they do anyway. Mike was diagnosed shortly after #kscope13, right around his 50th birthday (Happy Birthday Mike, Love, Cancer!). Ugh. (I want to say, "are you shittin' me?" see what I mean about the jokes? I can't help myself, I'm 14). Needless to say, cancer isn't really a joke. We all know someone affected by it. It is...well, it's not fun.

Go read his post if you haven't already. I'm going to give my version of that story. I'll wait...

So, Sunday morning, Game 3 of the World Series went to the Cardinals in a very bizarre way. I was watching highlights that morning as I had missed the end of the game (doesn't everyone know that I'm old and can't stay up that late to watch baseball?). Highlights. Mike lives in St. Louis. He's a Cardinals fan. Wouldn't it be cool if he and his family could go to the game (mostly just his family, I don't like Mike that much)? So I make some phone calls to see what people think of my idea. My idea is met with resistance. OK, I'll skip the people. Let's call Lisa (Mike's wife).

Apparently Sundays are technology-free days in the Riley household, so no response. I go for a bike ride, but I take my phone, just in case Lisa calls me back. After the halfway point, my phone rings, and I jump off the bike to answer.

So I talked to Lisa about my idea, can Mike handle the chaos of a World Series game?

We hang up and she goes to work. BTW, I asked her to keep my name out of it, but she didn't. We'll have words about that in the future.

She calls back (I think, it may have been over text, 2 weeks is an eternity to me). "He doesn't think he can do it."

So I call Mike directly (Lisa had already spoiled the surprise.)

"What about box seats? You know, where the people with top hats and monocles sit? Away from the riffraff, much more comfortable, and free food and beer."

Backstory. Mike had finished his first round of chemo less than a week before Sunday. To make things worse, he decided it was a good time to throw out his back. He wasn't in the best of shape.

Mike said he thought he could do it.

OK, nay-sayers aside, let's see what we can do. I emailed approximately 50 people, mostly ODTUG people; board members, content leads, anyone I had in my address book. "Hey, wouldn't it be great to send Mike and his family to Game 5 of the World Series? We need to do this quick, tickets will probably double in price tonight especially if the Cardinals win." (that would mean Game 5 would be a clincher for the Cardinals, at home, muy expensive).

Within about 20 minutes, a couple of people pledged $600.

Holy shit!

At the prices I had seen, I was hoping to get between $50 and $100 from 50 people, hoping. I had $600 already. Game starts. Now it's up to $1100 in pledges. Holy shit, Part II. This might just be possible. Another 30 minutes and we're about an hour into Game 4. Ticket prices have already gone up by $250 a ticket. Given that maybe 4 people have responded and I have $1600 in pledges, I pull the trigger. I bought 4 box seat tickets for the Riley family. (I had to have a couple of beers because I was about to drop a significant chunk of change without actual cash in hand, I could be out a lot of money, liquid courage is awesome).

Tickets sent to the Riley family. Pretty good feeling.

Like I said, I was confident, but I was scared. Before the end of the night though, there was over $5K pledged to get Mike and family to Game 5. Holy shit, Part III.

By midday Monday, pledges were well over $7K. I'll refer you back to Mike's post for more details. Shorter: jerseys for the family and a limo to the game.

Here's the breakdown: 35 people pledged, and paid, $8,080. Holy shit, Part IV. Average donation was $230.86. Median was $200. Low was $30 and high was $1000. Six people gave $500 or more. Nineteen people gave $200 or more. The list is a veritable Who's Who in the Oracle community.

Tickets + Jerseys + Limo = $6027.76

Riley family memory = Priceless.

So, what happened to the rest? Well, they have bills. Lots of bills. With the remainder, $2052.24, we paid off some hospital bills of $1220.63. There is currently $831.61 that will be sent shortly. It doesn't stop there though. Cancer treatment is effing expensive. Mike has surgery in December. He'll be on bed rest for some time. His bed is 17 years old. He needs a new one. After that, more chemo and more bills.

"Hey Chet, I'd love to help the Riley family out, can I give you my money for them?"

Yes, absolutely. Help me help them. I started a GoFundMe campaign. Goal is $10K. Any and all donations are welcome. Gifts include a thank you card from the Riley family and the knowledge that you helped out a fellow Oracle (nerd, definitely a nerd) in need. You can find the campaign here.

If you can't donate money, I've also created a hashtag so that we can show support for Mike and his family. It's #fmcuta (I'll let you figure out what it means). Words of encouragement are welcome and appreciated.

Thank you to the 35 who have already so generously given. Thank you to the rest of you who will donate or send out (rude) tweets.

Tuesday, November 12, 2013

Wednesday, November 6, 2013

Fun with SQL - My Birthday

This year is kind of fun, my birthday is on November 12th (next Tuesday, if you want to send gifts). That means it will fall on 11/12/13. Even better perhaps, katezilla's birthday is December 13th. 12/13/14. What does this have to do with SQL?

Someone mentioned to me last night that this wouldn't happen again for 990 years. I was thinking, "wow, I'm super special now (along with the other 1/365 * 6 billion people)!" Or am I? I had to do the math. Since date math is hard, and math is hard, and I'm good at neither, SQL to the rescue.


    select
      to_number( to_char( sysdate + ( rownum - 1 ), 'mm' ) ) month_of,
      to_number( to_char( sysdate + ( rownum - 1 ), 'dd' ) ) day_of,
      to_number( to_char( sysdate + ( rownum - 1 ), 'yy' ) ) year_of,
      sysdate + ( rownum - 1 ) actual
    from dual
    connect by level <= 100000

(In case you were wondering, 100,000 days is just shy of 274 years. 273.972602739726027397260273972602739726 to be more precise.)

That query gives me this:

    MONTH_OF DAY_OF YEAR_OF ACTUAL
    -------- ------ ------- ----------
    11       06     13      2013/11/06
    11       07     13      2013/11/07
    11       08     13      2013/11/08
    11       09     13      2013/11/09
    11       10     13      2013/11/10
    11       11     13      2013/11/11
    ...

So how can I figure out where DAY_OF is equal to MONTH_OF + 1 and YEAR_OF is equal to DAY_OF + 1? In my head, I thought it would be far more complicated, but it's not.

    select *
    from
    (
      select
        to_number( to_char( sysdate + ( rownum - 1 ), 'mm' ) ) month_of,
        to_number( to_char( sysdate + ( rownum - 1 ), 'dd' ) ) day_of,
        to_number( to_char( sysdate + ( rownum - 1 ), 'yy' ) ) year_of,
        sysdate + ( rownum - 1 ) actual
      from dual
      connect by level <= 100000
    )
    where month_of + 1 = day_of
      and day_of + 1 = year_of
    order by actual asc

Which gives me:

    MONTH_OF DAY_OF YEAR_OF ACTUAL
    -------- ------ ------- ----------
    11       12     13      2013/11/12
    12       13     14      2014/12/13
    01       02     03      2103/01/02
    02       03     04      2104/02/03
    03       04     05      2105/03/04
    04       05     06      2106/04/05
    05       06     07      2107/05/06
    ...

OK, so it looks closer to 100 years, not 990. Let's subtract. LAG to the rescue.

    select
      actual,
      -- order the window by the date itself; inside OVER(), "order by 2"
      -- sorts by the constant 2, not by the second column
      lag( actual, 1 ) over ( order by actual ) previous_actual,
      actual - ( lag( actual, 1 ) over ( order by actual ) ) time_between
    from
    (
      select
        to_number( to_char( sysdate + ( rownum - 1 ), 'mm' ) ) month_of,
        to_number( to_char( sysdate + ( rownum - 1 ), 'dd' ) ) day_of,
        to_number( to_char( sysdate + ( rownum - 1 ), 'yy' ) ) year_of,
        sysdate + ( rownum - 1 ) actual
      from dual
      connect by level <= 100000
    )
    where month_of + 1 = day_of
      and day_of + 1 = year_of
    order by actual asc

Which gives me:

    ACTUAL     PREVIOUS_ACTUAL TIME_BETWEEN
    ---------- --------------- ------------
    2013/11/12
    2014/12/13 2013/11/12               396
    2103/01/02 2014/12/13             32161
    2104/02/03 2103/01/02               397
    2105/03/04 2104/02/03               395
    2106/04/05 2105/03/04               397
    2107/05/06 2106/04/05               396
    2108/06/07 2107/05/06               398
    2109/07/08 2108/06/07               396
    2110/08/09 2109/07/08               397
    2111/09/10 2110/08/09               397
    2112/10/11 2111/09/10               397
    2113/11/12 2112/10/11               397
    2114/12/13 2113/11/12               396
    2203/01/02 2114/12/13             32161

So, it looks like every 88 years it occurs and is followed by 11 consecutive years of matching numbers. The next time 11/12/13 and 12/13/14 will appear is in 2113 and 2114. Yay for SQL!
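If you don't have a database handy, the same hunt can be sketched in Python as a cross-check. This is only an illustration, not part of the original queries: the function name `sequential_dates` is made up, and the fixed start date of 2013-11-06 (the day this post went up) is an assumed stand-in for `sysdate`.

```python
from datetime import date, timedelta


def sequential_dates(start, days):
    """Return dates in [start, start + days) where mm + 1 == dd and dd + 1 == yy."""
    matches = []
    for offset in range(days):
        d = start + timedelta(days=offset)
        yy = d.year % 100  # two-digit year, like to_char(..., 'yy')
        if d.month + 1 == d.day and d.day + 1 == yy:
            matches.append(d)
    return matches


# Assumed stand-in for sysdate: the day this post was written.
found = sequential_dates(date(2013, 11, 6), 100000)

# Pair each date with the one before it, which is what LAG does in the SQL.
gaps = [(later, (later - earlier).days) for earlier, later in zip(found, found[1:])]

for d, gap in gaps[:3]:
    print(d.isoformat(), gap)
```

Pairing `found` with `found[1:]` plays the role of LAG: each date is lined up with the one before it, and subtracting two dates gives the number of days between them.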

Tuesday, October 29, 2013

Why I'm voting for Danny Bryant and You Should Too

I'm talking about the ODTUG Board of Directors.

This.

That's really all you need isn't it?

Fine.

Today wraps up the voting period for the ODTUG Board of Directors. If you're asking me what ODTUG is, stop reading now. If you are a member of ODTUG, then please give me a few minutes to pontificate (that's a word I heard Jeff Smith use once, hopefully it makes sense here).

Your favorite Oracle conference, KScope, is largely successful based on the efforts of the Board, along with the expert advice of the YCC group. In addition, if you think ODTUG should "do more with Essbase" or "charge more for memberships" these decisions are made and carried out by the board.

So if you like being in ODTUG, and you want to help it get better and grow, and be as awesome as possible, you only need to do one thing today. Go vote. Midnight tonight (10/29) is the deadline. Do it.

You get to vote for several people. I suggest you read their bios. I'll save you the time for at least one vote, and that's for Danny Bryant.

Besides that awesome photo (#kscope12 in San Antonio) up above, here are several more reasons.

1. He's into everything. OBIEE. EBS. Essbase. SQL Developer. Database. Not very many people have their hands in everything, he does. He will be able to represent the entire spectrum of ODTUG members.

2. He's a fantastic human being. It's not just because he takes pictures of himself wearing ORACLENERD gear everywhere (doesn't hurt though), he's just, awesome.

3. This (Part II)

4. He also always answers the phone, tweets, and emails I send him. He might be sick, or he might just be that responsive. The ODTUG Board member responsibilities will fit nicely on his shoulders I believe.

So go vote. Now.

Monday, October 21, 2013

Excel VS BI in Financial Analysis: why the fight was over before it started

by Victor Fagundo

The argument over why businesses should abandon Excel in favor of more structured tools has been raging for as long as I have had more than a casual exposure to Oracle products. From the standpoint of an IT user, Excel appears to be a simplistic, flat-file-based, error-prone tool that careless people use despite its obvious flaws. Petabytes of duplicative Excel spreadsheets clog network drives across the globe; we as IT users know it, and it drives us crazy. Why, oh why, can’t these analysts, project managers, and accountants grasp the elegant beauty of a centralized database solution that ensures data integrity and security and has the chops to handle gobs of data, and abandon their silly Excel sheets?

I’ll tell you why: Excel is better. Excel is the most flexible and feature-rich tool for organizing and analyzing data. Ever. Period.

For the past few years I have lived in a hybrid Finance/IT role, and in coming from IT, I was shocked at how much Excel was used, for everything. But after working with Excel on a daily basis for several years, I am a convert. An adept Excel user can handily out-develop any tool (BI, APEX, Hyperion, Crystal Reports) when dataset size is not an issue. Microsoft has done too much work on Excel, made it too extensible, too intuitive, and built in so much, that no structured tool like BI, APEX, SAP, or Hyperion will EVER catch up to its usability and flexibility.

Take this real-world example that came across my desk a few months ago: for a retail chain define a by-week, by-unit sales target, and create a report that compares actual sales to this target. Oh, and the weekly sales targets get adjusted each quarter based on current financial outlook.

How quickly could you turn around a DW/BI solution to this problem? What would it involve?

• Create table to house targets

• Create ETL process to load new targets

• Define BMM/Presentation Layers to expose targets

• Develop / test / publish report.

A day? Maybe? If one person handled all steps (unlikely, since the DB layers and RPD layers are probably handled by different people).

I can tell you how long it took me in Excel: 3 hours (OBIEE-driven data-dump, married with the target sheet supplied to me). I love OBIEE, but Excel was still miles faster and more efficient for this task. And I could regurgitate 6 other examples like this one at a moment’s notice.

Case in point: 95% of the data that C-level executives use to make strategic decisions is Excel based.

If you’ve ever sat in on a presentation to a CEO or other C-level executive at any medium to large sized company, you know that people are not bringing up dashboards, or any other applications. They are presenting PowerPoints with a few (fewer than 7) carefully massaged facts on them. If you trace the source of these numbers back down the rabbit hole, your first stop is always Excel. Within these Excel workbooks you will find “guesses” and “plugs” that fill gaps in solid data, to arrive at an actionable bit of information. It’s these “guesses” and “plugs” that are very hard to code for in an environment like OBIEE (or any other application). Can it be done? Yes, of course, with gobs of time and money. And during the fitful and tense development, the credibility of the application is going to take major hits.

Given the above, the usefulness of OBIEE might seem bleak. But I strongly feel that applications such as OBIEE do have a proper place in the upper organizational layers of modern business: Facilitating the Tactical business layers, and providing data-dumps to the Strategic Business layers.

Since this post is mainly about Excel, I will focus on how OBIEE can support the analyses that are inevitably going to be done in Excel.

Data Formatting, Data Formatting, FORMATTING!! I can’t stress this enough. For an analyst, having to re-format numbers that come out of an export so that you can properly display them or drive calcs off them in Excel is infuriating and wasteful. My favorite example: in a BI environment I worked with, percentages were exported as TEXT, so while they looked fine in the application, as soon as you exported them to Excel and built calcs off them, your answer was overstated by a factor of 100 (Excel understood “75%” to be the number 75 with a text character appended, not the number 0.75).

Ask your users how they would like to SEE a fact in Excel: with decimals or not? With commas or not? Ensure that when exported to Excel, facts and attributes function correctly.
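To make the percentage pitfall concrete, here is a small Python sketch (Python is only standing in for the spreadsheet logic, and the helper name `percent_text_to_number` is hypothetical). It reproduces the factor-of-100 overstatement described above and shows the conversion an export would ideally have done before the data reached the analyst.

```python
def percent_text_to_number(text):
    """Convert exported text such as '75%' into the fraction 0.75."""
    cleaned = text.strip()
    if not cleaned.endswith("%"):
        raise ValueError(f"not a percentage: {text!r}")
    return float(cleaned[:-1].replace(",", "")) / 100


sales = 2000.0

# Misreading from the example above: "75%" treated as the number 75.
naive = sales * float("75%".rstrip("%"))

# Intended reading: "75%" is the fraction 0.75.
correct = sales * percent_text_to_number("75%")

print(naive, correct)
```

The two results differ by exactly the factor of 100 mentioned above, which is why the export should hand Excel a true number with a percent format rather than text.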

“Pull” refreshes of information sources in Excel. In the finance world, most Excel workbooks are low to medium complexity financial models, based off a data-dump from a reporting system. When the user wants to refresh the model, they refresh the data-dump, and the Excel calculations do the rest. OBIEE currently forces a user to “push” a new data-dump by manually running/exporting from OBIEE and then pasting the data into the data-dump tab in the workbook. What an Excel user really desires is a data-dump that can be refreshed automatically, using values that exist on other parts of the workbook to define the filters of the data-dump. Then all the user needs to do is trigger a “pull” and everything else is automated. Currently OBIEE has no solution to this problem that is elegant enough for the common Excel user. (Smartview must have its filters defined explicitly in the Smartview UI each time an analysis is pulled.)

The important part to take away from these 2 suggestions, and this entire post, is that to maximize the audience of OBIEE, we must acknowledge that Excel is the preferred tool of the Finance department, due to its flexibility, and support friendly exports to Excel as a best practice. We must also understand that accounting for this flexibility in OBIEE is daunting, and probably not the best use of the tool. If your users are asking for a highly complex attribute or fact, that is fraught with exceptions and estimations, chances are they are going to be much happier if what you give them is reliable information in a data-dump form, and allow them to handle the exceptions and estimations in Excel.

Monday, September 16, 2013

#OOW13

I'm going to be busy.

Here's my list of events:

- Saturday

  - I arrive in San Francisco on Saturday around 1 PM. If you're arriving at around the same time, let me know, we can share a cab into the city.

  - Beer. After arriving I plan on finding a very cold Pliny the Elder. Or three.

  - ODTUG Dinner. I'm crashing this one. It's Board members only to my knowledge and until someone says I cannot go (especially if fueled by more than one Pliny the Elder), I'm going.

- Sunday

  - Open World Bridge Run. Not sure if I can make it, but I'm going to try. I'm presenting at 10:15 so it will be a tough decision.

  - 10:30 to 11:30. Thinking Clearly About Performance. Somehow I managed to con Cary Millsap into a duet of sorts. I have him convinced it is the other way around. Either way, it should be fun (I am not nervous!).

  - 2:15 to 4:30, Software Development in the Oracle Ecosystem, Part I and Part II. I'm moderating the aforementioned Mr. Millsap, Sten Vesterli, Markus Eisele and Jerry Brenner (My first boss was scheduled to speak as well, but he had a last minute change of plans, jerk).

  - Oracle ACE Dinner. Evening.

  - Post Oracle ACE Dinner drinks...wherever the night takes me.

- Monday

  - Oracle OpenWorld - San Francisco Bay Swim - Part II, 7:30 AM. We had almost 20 last year, 33 have signed up (on the page anyway) this year. Come along. Cool t-shirts too, sponsored by Oracle Technology Network and designed by Lauren Prezby.

    Let's not forget the swim caps! Sponsored by the encouragable Bjoern Rost of The portrix group (he's like me, afraid of capital letters) (designed by Lauren Prezby).

  - Oaktable World. You'll most likely catch me here after the swim and before the...

  - Wear Your ORACLENERD Gear Day, 3 PM to 4:30 PM. We'll be taking a group photo around 4:30 PM. If you can't make it for that, come by when you can and get a picture with me. You know I like that sh...stuff. You don't have a shirt/hat/sticker/random-item? OTN Lounge is giving away one hundred cool red t-shirts (Lauren Prezby, again).

    This picture is coinciding with the APEX Developer Challenge which goes from 3 - 7:30 that afternoon/evening. I might even give it a go (fueled, hopefully, by Pliny the Elder).

- Tuesday

  - Oaktable World.

  - Leave at noon.

Monday, August 26, 2013

DBA or Developer?

I've always considered myself a developer and a LOWER(DBA). I may have recovered perhaps one database and that was just a sandbox, nothing production worthy. I've built out instances for development and testing and I've installed the software a few hundred times, at least. I've done DBA-like duties, but I just don't think of myself that way. I'm a power developer maybe? Whatevs.

I'm sure it would be nearly impossible to come up with One True Definition of The DBA ™. So I won't.

I've read that Tom Kyte does not consider himself a DBA, but I'm not sure most people know that. From Mr. Kyte himself:

At the same conference, I asked Cary Millsap the same question:

I read Cary for years and always assumed he was a DBA. I mean, have you read his papers? Have you read Optimizing Oracle Performance? Performance? That's what DBAs do (or so I used to think)!

It was only after working with him at #kscope11 on the Building Better Software track that I learned otherwise.

Perhaps I'll make this a standard interview question in the future...

Semi-related discussions:

1. Application Developers vs. Database Developers

2. Application Developers vs. Database Developers: Part II

Tuesday, August 13, 2013

Conditional Formatting of Calculated Items in OBIEE 11g

By Victor Fagundo

As people searched for a work around to this problem 3 common solutions have arisen:

Now with 11g providing conditional formatting that allows you to override the default data format, this is possible via the following steps:

* Note that this would also allow you to apply visual formatting if you wanted to distinguish this row/column as a total.

If you try to setup a filter that is true when the dimension is not in reasonable range of values ( in this example we try to format off all years not in the 2000s ) you will find that your calculated item is skipped as well (this has the added vulnerability of being very explicit):

The reason for all this is that the calculated item “borrows” EACH of the dimension values it operates against. Hence, no matter how inventive your filter is, as long as you are trying to somehow separate the calculated member away from the members it is operating on, you will never succeed. This member “borrowing” is apparent if you add the dimension it operates against to the query a 2nd time, and look at the table view.

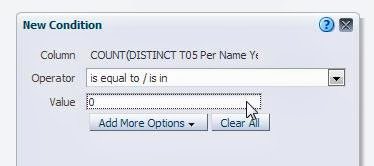

But since the “member value” given to the calculated item does not actually exist in the dimension, if you try to perform a count distinct against it, you will always get zero.

There is your difference; there is your “trigger.” The rest is basic formatting.

1: You might suggest that this requirement is better served using column(s) with time series calculations, and you might be right. However, more often than not the user will want to SEE the time periods being compared ( 2012 vs 2011, or 08/07/2012 vs 08/07/2011). When using facts with time series calculations you will only be able to show “this year” vs “last year” since the column heading of the time series calculated fact will always be static. In these cases you will need to use the base fact and a time dimension, along with the solution provided here.

2: All screen shots, and examples used in this post are performed in Sample App V305. An XML of the final correctly formatted report can be downloaded here.

Calculated items in OBIEE Pivot tables can be very useful in certain reporting circumstances, either for ease of development, or to meet specific report requirements. While calculated items in OBIEE are easy, and flexible, they do have one important drawback: they take on the data and display formatting of the fact column they are calculated against.

The most common case is the calculation of a % change across a time dimension in financial reporting (Year over Year, Quarter over Quarter, etc.; see footnote 1). This type of calculation usually takes the form of a percent change calculation similar to the one below:

(( $2 - $1 ) / $1) *100

By default, if you perform this calculation against a numerical fact (sales, customers) you will run into the problem of how to display the % change in the correct format, since the calculated % will want to take the form of the fact it is calculated against, as can be seen in the example below (see footnote 2):

Figure 1 - Pivot Table

Figure 2 - Calculated item

Figure 3 - Results

Not very pretty at all.

- Use HTML formatting tricks to “hide” trigger text in the results, then conditionally format off those triggers. While inventive, as the comments note, this solution falls flat if the report is ever printed, as the PDF engine will pick up and display all of the hidden characters.

- Convert the pivot table to a regular table with some complex column formulas. Very time consuming and cumbersome, and it would also not solve the requirement of showing the dimension values, as noted in footnote 1.

- Convert the calculated result to text and manually add your formatting characters. I don’t think this actually works since the calculated fields won’t accept logical SQL functions, and this would be very cumbersome.

- Add a column that is a COUNT DISTINCT on the dimension that you are calculating across (in the displayed example, “Time T05 Per Name Year”). This column will serve as your “trigger” to apply your conditional formatting.

Figure 4 - Column Formula

- For each of your facts, apply a conditional format that is triggered when the above column value is zero. In the formatting, apply whatever visual and data formats you desire. In this example we will format the data as a percent, with one decimal place.

Figure 5 - Condition

Figure 6 - Format when condition is met

- Exclude the “trigger” column from your pivot view. View your results and be satisfied:

Figure 7 - Correct formatting of calculated item.

* Note that this would also allow you to apply visual formatting if you wanted to distinguish this row/column as a total.

Why it works

The use of conditional formatting that applies a data type as part of the format is a straightforward leap of logic, but what to use as the trigger? Most people will try to use the dimension they have set up the calculation in. However, if you try to use the text description given to the calculated item you will find that the condition is never applied:

Figure 8 - Condition on dimension

Figure 9 - Condition never met, format never applied

If you try to set up a filter that is true when the dimension is not in a reasonable range of values (in this example we try to format off all years not in the 2000s), you will find that your calculated item is skipped as well (this has the added vulnerability of being very explicit):

Figure 10 - Condition on dimension values

Figure 11 - Condition never met, format never applied

The reason for all this is that the calculated item “borrows” EACH of the dimension values it operates against. Hence, no matter how inventive your filter is, as long as you are trying to somehow separate the calculated member away from the members it is operating on, you will never succeed. This member “borrowing” is apparent if you add the dimension it operates against to the query a 2nd time, and look at the table view.

Figure 12 - Calculated item "borrows" members

But since the “member value” given to the calculated item does not actually exist in the dimension, if you try to perform a count distinct against it, you will always get zero.

Figure 13 - Count distinct against dimension

There is your difference; there is your “trigger.” The rest is basic formatting.

1: You might suggest that this requirement is better served using column(s) with time series calculations, and you might be right. However, more often than not the user will want to SEE the time periods being compared ( 2012 vs 2011, or 08/07/2012 vs 08/07/2011). When using facts with time series calculations you will only be able to show “this year” vs “last year” since the column heading of the time series calculated fact will always be static. In these cases you will need to use the base fact and a time dimension, along with the solution provided here.

2: All screen shots, and examples used in this post are performed in Sample App V305. An XML of the final correctly formatted report can be downloaded here.

Tuesday, July 30, 2013

Learn To ______ In A Year

It started at The Talent Code blog by Daniel Coyle a few weeks back, What's Your LQ (Learning Quotient)?. That led me to Diamondbacks’ Goldschmidt Has Little Ego and Few Limits. I like baseball stories. I especially like this passage:

“A lot of kids have so much pride that they want to show the coaches and the front office that they know what they’re doing, and they don’t need the help,” Zinter said. “They don’t absorb the information because they want us to think they know it already. Goldy didn’t have an ego. He didn’t have that illusion of knowledge. He’s O.K. with wanting to learn.”

I identify with that. I believe part of my success is because I ask questions.

Back to the original article. Then I end up here, Can Everyone Be Smart at Everything? I seem to lack the ability to focus for extended periods of time. Well, not quite true. I have the ability to focus, but I like to focus on a million different things. Does that count? I don't know.

I'm often envious of my friends who have been DBAs for 20 years, or worked with OBIEE for 10 years (don't argue with me...I know Oracle hasn't owned it for 10 years, I'm looking at you Christian), or APEX for 10 years (that's safe to say). I've flirted with all of those, but I've never committed...See how I get distracted easily? Wow.

And just as importantly, that mistakes are part of good learning. As a Wired article recently reported about why some are more effective at learning from mistakes, “the important part is what happens next.” People with a “growth mindset” — those who “believe that we can get better at almost anything, provided we invest the necessary time and energy” — were significantly better at learning from their mistakes.

and then...

“The meaning of difficulty changes. Difficulty means trying harder, trying a different strategy. They understand that change is possible, and progress occurs over time.”

OMFG. Focus!

Back to the original article and I'm reading through the comments. Someone links up to this young lady who taught herself how to dance in a year. Watch it.

Which finally brings me back to The Talent Code, To Improve Faster, Think Like a Startup. Staying with me? How about this?

Finally, there's a point. I want to do this. Maybe not dance (as much fun as that may be), but something else. Krav Maga? Algebra? Calculus (I'm pursuing my physics or engineering degree in 2035, I need to study my math). I want to test out her technique. Small, discrete steps practiced daily towards some end goal (pass a calc test, take a real estate licensing test, whatever). The problem for me, if you haven't noticed, is focus. This method may help.

If you were to try something like this, what would you set out to learn?

Monday, July 8, 2013

Write It Out

This one was sitting in the drafts folder for a week or two, then I saw this post on Twitter:

Years ago I had a boss who was my technical superior (he may still be). I used to pop in and out of his office, or try to, and ask questions. Most of them were silly, n00b questions.

He was nice, but busy. It didn't take me very long to "read" that. So I slowed down my pace of questions. I began to write things up via email so that he could respond when he had the time. I started to use forums as well. Then I was directed to How To Ask Questions The Smart Way.

One of the things that became evident quickly is that I didn't always have to hit Send (email) or Submit (forum post), just the act of writing it out forced me to think through the issue and more often than not, I would figure out the answer on my own.

Flash forward five or six years and I started to receive all these questions, either in person or via chat. "Send me an email" was usually my response, especially if I was in the middle of something (see: Context Switching). I was happy to help, just not at that moment. With email, I could get to it when I got a break (or needed one).

One of my favorite people, Jason Baer, who has worked for RittmanMead for the last couple of years, took this to heart. We started working together in December of 2009 and he would pepper me with questions constantly. I could never keep up. "Email the question Jason."

I didn't realize it, but I started getting fewer and fewer emails/questions from him. He began to figure them out on his own. It seemed most of the time he had just missed something, other times he just figured out another way to do something.

Jason is a smart guy. I think I'm smart. Sometimes it's just easier to ask the question without thinking it through. In fact, I do that quite a bit on The Twitter Machine ™, especially those errors that I seem to know but just don't have the bandwidth to research (think DBA type questions). I believe the types of questions that must be written down are those that deal with Approach (design, architecture, etc). Any of those ORA errors better come along with a link to the error code in the documentation and some proof that you've researched it a bit yourself...but then that's getting into How To Ask Questions The Smart Way.

Go out and practice. Next time you have a (technical) question for someone, anyone, write it down and see what happens.

I wonder what percentage of people ask a question and figure it out on their own before you help them. What about in your experience?

— Amy Caldwell (@amyccaldwell) July 8, 2013

Monday, July 1, 2013

Context Switching: An Example

Last week at #kscope13 I saw an outstanding example of context switching. If you don't know what it is, Tom Kyte explains it here.

The two environments are just "different", separate and distinct. You can do plsql without SQL, you can do SQL (and many times do) without invoking plsql. There is a call overhead to go from SQL to PLSQL (the "hit" is most evident when SQL invokes PLSQL - not so much the other way, when SQL is embedded in PLSQL). Even if this hit is very very small (say 1/1000th of a second) - if you do it enough, it adds up. So, if it can be avoided - it should be.

The session was Using Kanban and Scrum to Increase Your Development Throughput presented by Stew Stryker (not to be confused with Ted Striker) of Dartmouth College (Stew gave me a gallon of Vermont Maple Syrup which exploded in my bag on the flight home, a gift for sharing my hotel room. Thanks Stew! ;)). So here's the example he gave to demonstrate context switching.

Take a list of names and time yourself writing out the first letter of each name, then the second, until you are finished.

Now, same list of names and write them out the way you normally would, left to right.

If the first method was faster, you are a freak of nature.

Wednesday, June 12, 2013

Required Reading

It's not often that I run across articles that really resonate with me. Last night was one of those rare occasions. What follows is a sampling of what I consider to be required reading for any IT professional with a slant towards database development.

- Bad CaRMa

- Big Ball of Mud

- How To Ask Questions The Smart Way

- Business Logic - PL/SQL Vs Java - Reg

- Thinking Clearly About Performance

- The Complicator's Gloves

Bad CaRMa

how NOT to design a database schema - super classic article. Every data architect should read this ! simple-talk.com/opinion/opinio… @timothyjgorman

— Kyle Hailey (@dboptimizer) June 11, 2013

That led me to Bad CaRMa by Tim Gorman. This was an entry in Oracle Insights: Tales of the Oak Table, which I have not read, yet.

A snippet:

...The basic premise was that just about all of the features of the relational database were eschewed, and instead it was used like a filing system for great big plastic bags of data. Why bother with other containers for the data—just jam it into a generic black plastic garbage bag. If all of those bags full of different types of data all look the same and are heaped into the same pile, don't worry! We'll be able to differentiate the data after we pull it off the big pile and look inside.

Amazingly, Randy and his crew thought this was incredibly clever. Database engineer after database engineer were struck dumb by the realization of what Vision was doing, but the builders of the one-table database were blissfully aware that they were ushering in a new dawn in database design...

This is from 2006 (the book was published in 2004). Not sure how I missed that story, but I did.

Big Ball of Mud

I've read this one, and sent it out, many times over the years. I can't remember when I first encountered it, but I read this once every couple of months. I send it out to colleagues about as often. You can find the article here.

A BIG BALL OF MUD is haphazardly structured, sprawling, sloppy, duct-tape and bailing wire, spaghetti code jungle. We’ve all seen them. These systems show unmistakable signs of unregulated growth, and repeated, expedient repair. Information is shared promiscuously among distant elements of the system, often to the point where nearly all the important information becomes global or duplicated. The overall structure of the system may never have been well defined. If it was, it may have eroded beyond recognition. Programmers with a shred of architectural sensibility shun these quagmires. Only those who are unconcerned about architecture, and, perhaps, are comfortable with the inertia of the day-to-day chore of patching the holes in these failing dikes, are content to work on such systems.

Read it. Remember it.

How To Ask Questions The Smart Way

Ever been in a forum? Has anyone ever given you the "RTFM" answer? Here's how you can avoid it. How To Ask Questions The Smart Way. I read this originally about 9 or 10 years ago. I've sent it out countless times.

The first thing to understand is that hackers actually like hard problems and good, thought-provoking questions about them. If we didn't, we wouldn't be here. If you give us an interesting question to chew on we'll be grateful to you; good questions are a stimulus and a gift. Good questions help us develop our understanding, and often reveal problems we might not have noticed or thought about otherwise. Among hackers, “Good question!” is a strong and sincere compliment.

Despite this, hackers have a reputation for meeting simple questions with what looks like hostility or arrogance. It sometimes looks like we're reflexively rude to newbies and the ignorant. But this isn't really true.

What we are, unapologetically, is hostile to people who seem to be unwilling to think or to do their own homework before asking questions. People like that are time sinks — they take without giving back, and they waste time we could have spent on another question more interesting and another person more worthy of an answer. We call people like this “losers” (and for historical reasons we sometimes spell it “lusers”).

Business Logic - PL/SQL Vs Java - Reg

The article can be found here.

I don't believe this is the one that I would read just about every day during my first few years working with Oracle, but it's representative (I'll link up the original when I find it). I cut my teeth in the Oracle world by reading AskTom every single day for years. Some of my work at the time included working with java server pages (jsp) - at least until I found APEX. I monkeyed around with BC4J for awhile as well, but I believe these types of threads on AskTom kept me from going off the cliff. In fact, I got to a point where I would go to an interview and then debate the interviewer about this same topic. Fun times.

if it touches data -- plsql.

If it is computing a fourier transformation -- java.

If it is processing data -- plsql.

If it is generating a graph -- java.

If it is doing a transaction of any size, shape or form against data -- plsql.

Thinking Clearly About Performance

Cary Millsap. Most of the people seem to know Cary from Optimizing Oracle Performance, I didn't. I first "met" Cary virtually and he was gracious enough to help me understand my questions around Logging, Debugging, Instrumentation and Profiling. Anyway, what I've learned over that time, is that Cary doesn't think of himself as a DBA, he's a Developer. That was shocking for me to hear...I wonder how many others know that. So I've read this paper about 20 times over the last couple of years (mostly because I'm a little slow). I organize events around this topic (instrumentation, writing better software, etc) and this fits in perfectly. My goal is to one day co-present with Cary, while playing catch, on this topic (I don't think he knows that, so don't tell him). Link to his paper can be found here. Enjoy!

The Complicator's Gloves

One of my favorite articles from The Daily WTF of all time. Find the article here. The gist of the story is this: an internal forum where people were discussing how to warm a given individual's hands on his bike ride to work. The engineers then proceeded to come up with all kinds of solutions...they spent all day doing this. Finally, someone posts, "wear gloves." End of discussion. Love it. I wrote about it years ago in Keeping it Simple. For a few years I considered buying up thecomplicatorsgloves.com and trying to gather related stories, but I got lazy. You should read this often, or better, send it out to colleagues on a regular basis to remind them of their craziness.

I'll continue to add to this list as time goes on. If you have any suggestions, leave a comment and I'll add them to the list.

Thursday, April 18, 2013

caveats

I always find myself putting an asterisk (if only mentally) next to certain statements. I shall now put all those statements here and link back.

- I don't know everything

- I'm not the best developer in the world, but I constantly work at getting better...

- If I make a statement about something, that's been my experience. Your results may vary.

- I am not a salesman.

- I do not work for <insert company name which I just pitched here>

Saturday, April 6, 2013

Ubuntu 12.10 + nvidia

I updated my host OS a few months back after getting repeated notifications (yes, I know, I can shut them off) that 10.04 (I think) was moving out of support.

Since then, I've had an issue with my Nvidia drivers. Basically, I get video on a single monitor (dual set up) and that single monitor resolution is like 200 x 400 (no, it's not really that, but it is gigantic). Thank goodness for The Google Machine™. That originally led me here on StackOverflow. (Another reason to do things from the command line, you can remember things with history | grep nvidia).

I'm on the 4th time of going through this exercise. Each time the kernel is updated, nvidia breaks. Fortunately for me, that guy on StackOverflow gave me all the information I needed. This time after reboot and the gigantic screen, I removed the nvidia drivers and then reinstalled them. No go. uname -r gave me the following: 3.5.0-26-generic and dpkg -l|grep headers showed an older version of the kernel headers. So I updated those, reinstalled nvidia-current and rebooted. Yay.
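The check I keep repeating by hand could be sketched as a couple of shell functions. This is only a sketch, not what I actually ran: the function names `headers_match` and `check_kernel_headers` are made up for illustration, and it assumes a Debian/Ubuntu box where `dpkg -l` lists installed packages and the headers package is named `linux-headers-<kernel release>`. It only prints suggestions; it doesn't install anything.

```shell
#!/bin/sh
# Sketch only: the function names below are hypothetical.

# headers_match RELEASE
# Reads `dpkg -l`-style output on stdin and succeeds only if the package
# linux-headers-RELEASE is listed as installed (status "ii").
headers_match() {
  awk -v pkg="linux-headers-$1" '
    $1 == "ii" && $2 == pkg { found = 1 }
    END { exit found ? 0 : 1 }
  '
}

# check_kernel_headers
# Compares the running kernel (uname -r) with the installed headers and
# prints the commands to try if they do not match. It changes nothing itself.
check_kernel_headers() {
  release="$(uname -r)"
  if dpkg -l | headers_match "$release"; then
    echo "Headers for $release are installed."
  else
    echo "No headers installed for $release. Things to try:"
    echo "  sudo apt-get install linux-headers-$release"
    echo "  sudo apt-get install --reinstall nvidia-current"
    echo "  sudo reboot"
  fi
}
```

Running `check_kernel_headers` after a kernel update would tell me whether the headers caught up with the new kernel before I bother removing and reinstalling the driver.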

Many "small" issues like this recently have me pondering a move back to, gasp, Windows or perhaps even a Mac. The Mac ecosystem scares me because it is expensive...but it's difficult to square when so many of my friends (technical and otherwise) swear by Macs. Something for another day I guess...

Thursday, April 4, 2013

Fun with CHAR

I'm busy deriving file layouts from PL/SQL. Probably close to 100 file definitions...each of them slightly different, each of them defined in the code. Fun!

There are a mixture of types too, fixed width, csv, etc. Thankfully, I've read enough of the code now that it's relatively easy to figure out. The fixed width variety is what this is about though.

In much of the code, there's a type that's defined, something like this:

    type my_record is record
    (
      column_01 CHAR(10),
      column_02 CHAR(10),
      column_03 CHAR(10)
    );

That's then used to receive assignments from incoming variables. I'll hardcode my variables for this exercise.

    declare
      type my_record is record
      (
        column_01 CHAR(10),
        column_02 CHAR(10),
        column_03 CHAR(10)
      );
      l_rec my_record;
    begin
      l_rec.column_01 := '1';
      l_rec.column_02 := '3';
      l_rec.column_03 := '6';
    end;

Littered throughout those assignments though, are things like LPAD and RPAD. You're going to say, "well, yeah, if it's a number, you may want it right aligned or something." Fair enough. But I'm not talking about those, I'm talking about this:

    l_rec.column_01 := rpad( ' ', 10 );
    l_rec.column_02 := '3';
    l_rec.column_03 := RPAD( ' ', 10 );

Ostensibly, these columns once held data. Instead of forcing the client (application, business, whatever) to change their processing bit, the file was left the same. Makes sense.

Then I started to think about it...it's a CHAR. CHAR is already fixed width. To wit:

    drop table t purge;

    create table t
    (
      x CHAR(10)
    );

    insert into t ( x ) values ( ' ' );
    insert into t ( x ) values ( null );

    select
      rownum,
      length( x ),
      x
    from t;

        ROWNUM  LENGTH(X) X
    ---------- ---------- ----------
             1         10
             2

I inserted a single space in the first record. It has a length of 10 despite only inserting a single character there.

So what's the purpose of those RPAD( ' ', 10 ) calls? I'm not sure.

The only reason I even began to think about it was that I ran across one type set up with VARCHAR data types. There it makes sense, using RPAD I mean. With the CHAR field, it's a waste of typing IMO. Perhaps it was just for readability...who knows?

Tuesday, March 26, 2013

On Work/Life Balance

Nolan Bushnell, founder of Atari, had this to say via his new book, Find the Next Steve Jobs.

(It’s been said that many people in high tech cannot balance their personal and work lives. Here’s another way to look at it: Their jobs are so interesting that it’s difficult to figure out what is work and what is play. Creative projects produce this kind of excitement.)

I've struggled with the work/life balance thing. I'm better now than I was a year ago, but it takes a lot of work. That quote definitely illustrates one aspect of how it could get so out of balance...I thoroughly enjoy what I do.

Monday, March 25, 2013

Analysis Tools...

I've taken on an effort to port a custom data integration (PL/SQL, Java, etc) application.

In that regard, I'm doing a fair amount of analysis right now. So I need help finding two tools:

1. A tool that will allow me to map (visually or otherwise) a single data point from source to target(s). I typically use Excel. It's easy to use and available everywhere. Where it falls apart, slightly, is that a single data point may have one or more middle steps (i.e. not target) and one or more targets. I think I want something like this:

Keep in mind though, I have potentially hundreds of columns in a system with thousands upon thousands of...

A couple of people have suggested using an ETL tool like Informatica, Pentaho or ODI. Yes. But I don't see it yet. Besides, I don't want to map to actually do something...most of the conversion has already been done and I'm picking it up at a particular step (near the beginning). What's missing is that mapping document that I want to create for everything...but that's another story.

2. I want to look at a view and know where those stupid unaliased columns are sourced from. A very, very basic example:

SELECT
  hs.column_1,
  hs.column_2,
  addr.address_line_1,
  var_value_01,
  var_value_02
FROM
  big_table hs,
  address_table addr,
  other_random_table ran
WHERE hs.address_id = addr.address_id
  AND hs.random_id = ran.random_id

VAR_VALUE_01 and VAR_VALUE_02, why don't you have aliases? Why did your developer neglect you so? Why can't every single developer just remember that someone, someday, will have to look at their code? Please? Pretty please? Or did you know it would be me and thus you did it on purpose? If so, I'm not talking to you again.

Anyway, it doesn't take me very long to figure out where those columns are sourced from. What if there are tens of those in a view with hundreds of columns? Yes, not enjoyable. What if there are many views just like this that you have to analyze? Yes!

Data Dictionary?!

Not yet. DBA_TAB_COLUMNS? Nope. Come on! It's got to be there somewhere...when you compile a view Oracle checks to make sure everything is a-ok, right? Doesn't it store that information somewhere? It must! I took to Twitter, naturally, and Steve Karam, aka @OracleAlchemist, found this possible gem:

@oraclenerd @thatjeffsmith @eaviles94 Check this out Chet. rwijk.blogspot.com/2008/10/dbadep…

— Steve Karam (@OracleAlchemist) March 26, 2013
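For what it's worth, here is a minimal sketch of one data dictionary angle. It is my own assumption of how you might narrow things down, not necessarily what the linked post describes. It joins ALL_DEPENDENCIES (which objects does the view reference?) to ALL_TAB_COLUMNS (which of those objects have a column by that name?). MY_VIEW is a hypothetical view name; the column names come from the example above.

```sql
-- Sketch only: list the tables/views that MY_VIEW (hypothetical name) depends on
-- and that also contain a column named like one of the unaliased columns.
SELECT c.column_name,
       d.referenced_owner,
       d.referenced_name,
       d.referenced_type
FROM   all_dependencies d
       JOIN all_tab_columns c
         ON  c.owner      = d.referenced_owner
         AND c.table_name = d.referenced_name
WHERE  d.owner = USER
AND    d.name  = 'MY_VIEW'            -- hypothetical view name
AND    d.type  = 'VIEW'
AND    d.referenced_type IN ( 'TABLE', 'VIEW' )
AND    c.column_name IN ( 'VAR_VALUE_01', 'VAR_VALUE_02' )
ORDER  BY c.column_name, d.referenced_name;
```

If a column name comes back with exactly one referenced object, that is very likely the source. If it comes back with more than one, the view wouldn't have compiled with the column unqualified, so something else (a synonym or an inline view, say) is in play and you're back to reading the code.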

I'm also requesting a feature in SQL Developer...or, trying to anyway. Back channels of course.

I've done this kind of analysis in the past, but it is usually a one off, so there never seemed to be a need to make it repeatable. Now, there is a need. A giant need. If you've got any ideas for me, let me know...

Wednesday, March 20, 2013

The Internet

Have you seen this State Farm ad?

I think it's hilarious.

Riding to batting practice with LC, he starts up with me...

LC: (in response to some statement I made) "Where'd you hear that?"

Me: "The Internet"

LC: "And you believed it?"

Me: "Yeah, they can't put anything on the internet that isn't true."

LC: "Where'd you hear that?"

Together: "The Internet"

We also do the "And then...?" skit from Dude, Where's My Car? He used to be able to rattle off the saying from Tommy Boy, "You can get a good look at a t-bone by sticking your head up a bull's..." - I'm pretty sure this is better than that.

Tuesday, March 19, 2013

dbms_output.put_line

I've been scratching my eyes out lately trying to reverse engineer lots of PL/SQL.

One thing I've seen a lot of is calls to dbms_output.put_line. Fortunately, I've seen some dbms_application_info.set_module and other system calls too. But back to that first one.

1. When I used dbms_output, I would typically only use it in development. Once done, I would remove all calls to it, test and promote to QA. It would never survive the trip to production.

2. Typically, when I used it in development, I would tire of typing out d b m s _ o u t p u t . p u t _ l i n e so I would either a, create a standalone procedure or create a private procedure inside the package, something like this (standalone version).

CREATE OR REPLACE PROCEDURE p( p_text IN VARCHAR2 )
IS
BEGIN
  dbms_output.put_line( p_text );
END p;

Easy. Then, in the code, I would simply use the procedure p all over the place...like this:

declare
  l_start_time date;
  l_end_time date;
begin
  l_start_time := sysdate;
  p( 'l_start_time: ' || l_start_time );
  --do some stuff here
  --maybe add some more calls to p
  l_end_time := sysdate;
  p( 'l_end_time: ' || l_end_time );
end;

Since the procedure is 84 characters long, I only have to call p 4 times to get the benefit. Yay for me...I think. Wait, I like typing.
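For completeness, here's a rough sketch of the other option mentioned above, the private procedure inside a package. The package name demo_pkg and the procedure do_work are made up for illustration; the only real point is that p lives in the body and not in the spec, so nothing outside the package can call it.

```sql
-- Sketch only: demo_pkg and do_work are hypothetical names.
CREATE OR REPLACE PACKAGE demo_pkg
IS
  PROCEDURE do_work;
END demo_pkg;
/

CREATE OR REPLACE PACKAGE BODY demo_pkg
IS
  -- Private: declared only in the body, so it is invisible outside the package.
  PROCEDURE p( p_text IN VARCHAR2 )
  IS
  BEGIN
    dbms_output.put_line( p_text );
  END p;

  PROCEDURE do_work
  IS
    l_start_time DATE := SYSDATE;
  BEGIN
    p( 'l_start_time: ' || TO_CHAR( l_start_time, 'YYYY-MM-DD HH24:MI:SS' ) );
    NULL; -- do some stuff here
    p( 'l_end_time: ' || TO_CHAR( SYSDATE, 'YYYY-MM-DD HH24:MI:SS' ) );
  END do_work;
END demo_pkg;
/
```

The private version has one small advantage when it's time to clean up before QA: every call to p is inside one package body, so removing p breaks compilation until the last call is gone.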

Tuesday, March 12, 2013

#kscope13

Back in September, I was asked, and agreed, to become the Content Chair for "The Traditional" track at Kscope 13. Like I mentioned there, I had been involved for the past couple of years and it seemed like a natural fit. Plus, I get to play with some really fun people. If you are ready to take advantage of Early Bird Registration, go here. (save $300)

Over the past few weeks we've finalized (mostly) the Sunday Symposium schedule. We're currently working on finalizing Hands-on-Labs (HOL).

Beginning last year, we've had the Oracle product teams running the Sunday Symposia. This gives them an opportunity to showcase their wares and (hopefully) provide a bit of a road map for the future of said wares. This year, we have three symposia: APEX; ADF and Fusion Development; and The Database and Developer's Toolbox.

ADF and Fusion Development

- Oracle Development Tools – Where are We and What’s Next - Bill Patakay, Oracle

- How to Get Started with Oracle ADF – What Resources are Out There? - Shay Shmeltzer and Lynn Munsinger, Oracle

- The Cloud and What it Means to Oracle ADF and Java Developers - Dana Singleterry, Oracle

- Going Mobile – What to Consider Before Starting a Mobile Project - Joe Huang, Oracle

- Understanding Fusion Middleware and ADF Integration - Frederic Desbiens, Lynn Munsinger, and Shay Shmeltzer, Oracle

- Open Q&A with the ADF Product Management

I love that they are opening up the floor to questions from their users. I wish more product teams would do that.

Application Express

- Oracle Database Tools - Mike Hichwa, Oracle

- Technology for the Database Cloud - Rick Greenwald, Oracle

- Developing Great User Interfaces with Application Express - Shakeeb Rahman, Oracle

- How Do We Build the APEX Builder? - Vlad Uvarov, Oracle

- How to Fully Utilize RESTful Web Services with Application Express - John Snyders, Oracle

- Update from APEX Development - Joel Kallman, Oracle

(If you see Joel Kallman out and about, make sure you mispronounce APEX). This is a fantastic group of people (minus Joel of course). Not mentioned above is the affable David Peake who helps put all this together. The community surrounding APEX is second-to-none.

Finally, The Database and Developer's Toolbox. I'm partial to this one because I've been involved in the database track for the past couple of years. Like last year, this one is being put together by Kris Rice of Oracle. There are no session or abstract details for this one as it will be based mainly on the upcoming 12c release of the database. However, we do have the list of speakers lined up. If you could only come for one day of this conference, Sunday would be the day and this symposium would be the one you would attend.

This symposium will start off with Mike Hichwa (above) and then transition to the aforementioned (too many big words tonight) Mr. Rice. He'll be accompanied by Jeff Smith of SQL Developer fame, Maria Colgan from the Optimizer team and Tom Kyte.

How'd we do? I think pretty darn good.

Don't forget to sign up. Early Bird Registration ends on March 25, 2013. Save $300.

Thursday, February 21, 2013

Run Scripts in SQL Developer

I finally decided to save a script that cleans out a couple of tables for me.

Now I have a script, how do I run it in SQL Dev? In SQL*Plus, I would run it like @clean_tables. Two things to note there, 1, I didn't have to put the extension on the file and b, I assumed SQL*Plus was running from the directory where my file was located. If I was running the script from a different directory, I would have to use either a relative path...or something, but I digress.
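To make the SQL*Plus side concrete, here's a minimal sketch. The script contents and table names are made up (I'm only assuming it deletes from a couple of tables), and the path in the last comment is hypothetical too.

```sql
-- clean_tables.sql (hypothetical contents; the table names are made up)
DELETE FROM staging_table_1;
DELETE FROM staging_table_2;
COMMIT;

-- From a SQL*Plus prompt started in the same directory, either form runs it,
-- because SQL*Plus adds the default .sql extension when none is given:
--   @clean_tables
--   @clean_tables.sql
--
-- From a different directory, give a relative or absolute path instead:
--   @../scripts/clean_tables
```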